Invariant Representation Learning in LLMs for Model Attribution

Under review

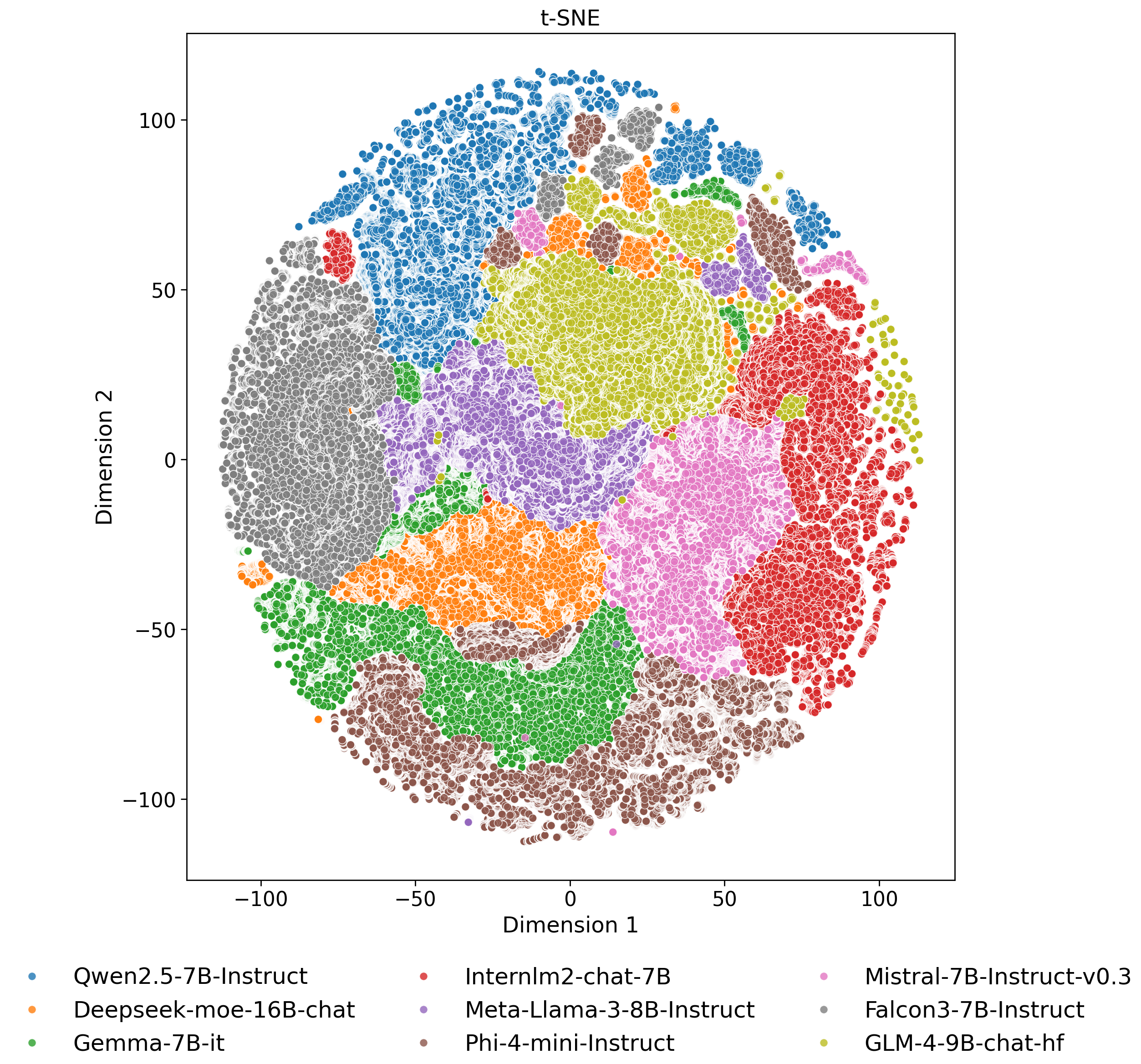

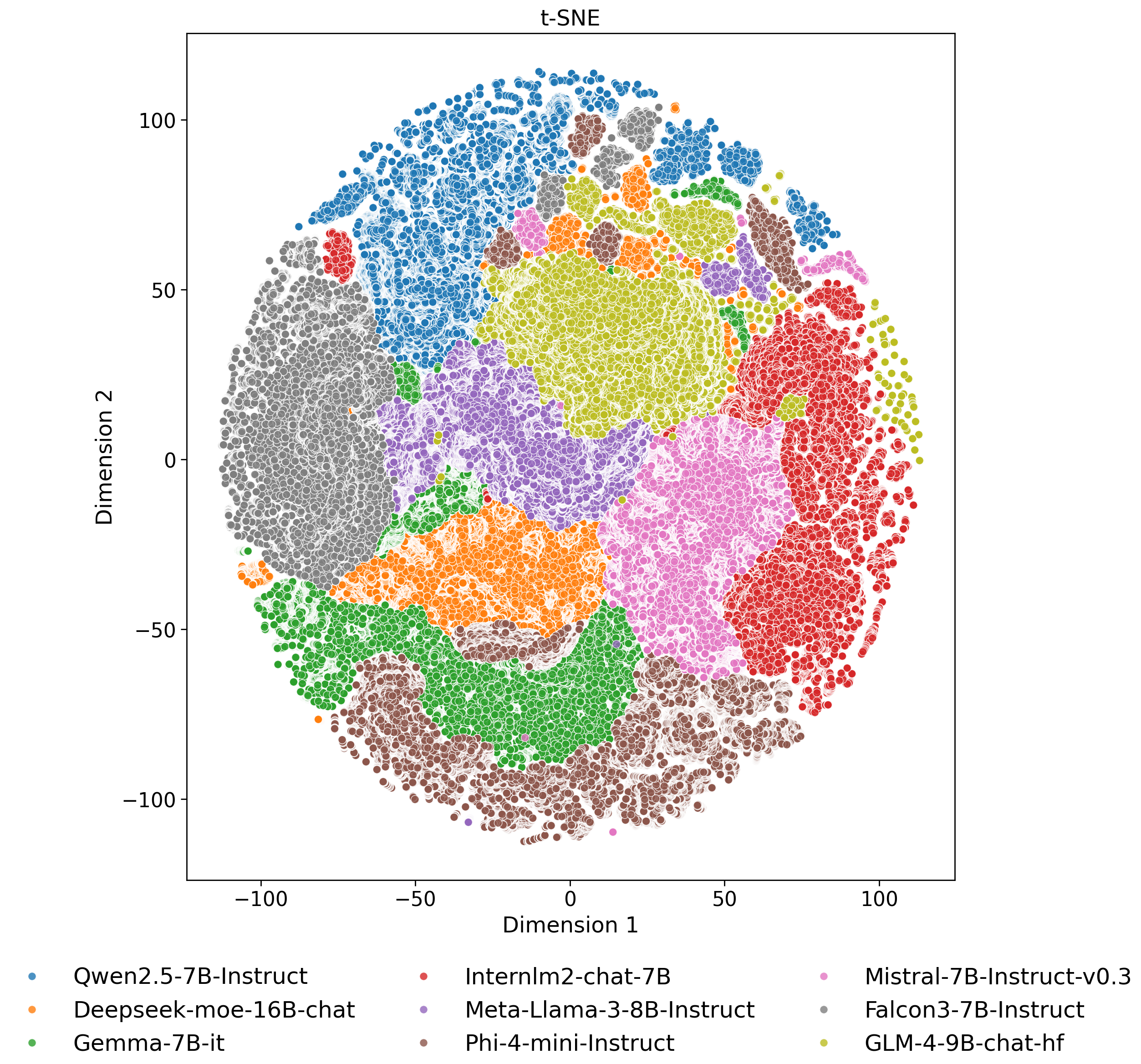

Layer-wise analysis framework for identifying paraphrase-stable latent representations in LLMs. Supports semantic clustering and model attribution tasks.

I study how models encode semantic meaning that remains stable under perturbations. My work spans robust representation learning for image watermarking, auto-augmentation, and identifying invariant latent features in LLMs for attribution and forensics.

I'm a doctoral researcher in Information Science & Technology at the University of Nebraska Omaha. My dissertation centers on invariant representation learning and its applications to robust image watermarking and LLM robustness.

Broadly, I design systems that remain stable under content-preserving transformations: geometric/photometric augmentations for images and lexical/structural paraphrases for text. I care about what an AI model knows versus how it encodes it.

Under review

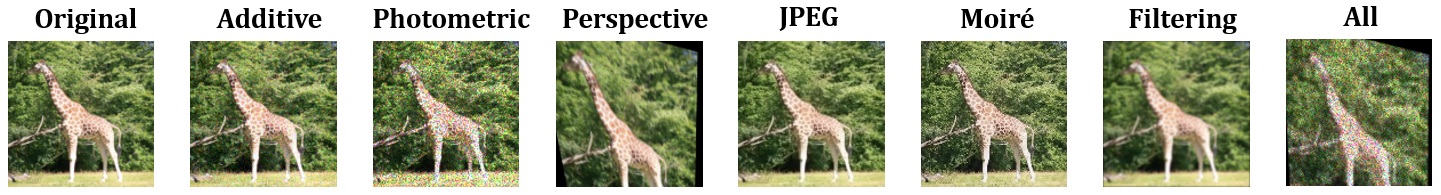

Differentiable augmentation framework that learns to adversarially perturb images to challenge encoders. Evaluates representation stability via classification and similarity-based objectives.

International Conf. on Computational Science & Computational Intelligence 2023

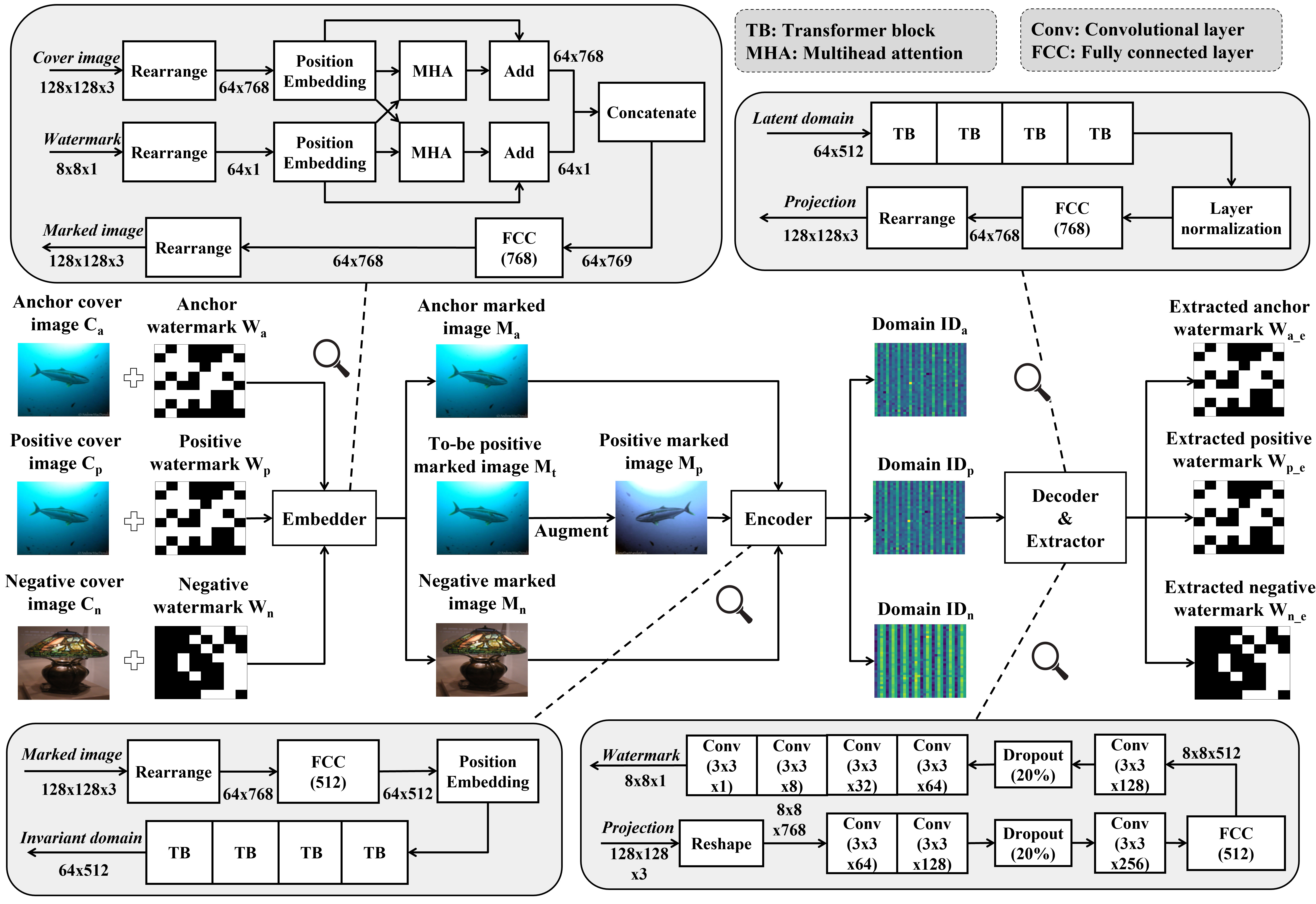

Watermark embedding and extraction method resilient to geometric and photometric attacks. Uses ViT-based cross-attention to align invariant domain features for robust watermark decoding. The figure above shows an overview of our proposed framework.

Links to papers in press will be added as they become available.